Updated: December 11, 2012

Last Monday, we discussed how certain SEO tactics (a.k.a. “black-hat” SEO) can get your site into a whole heap of trouble, and how these penalties can have devastating consequences for your business’ bottom line.

We outlined the first 5 of these ‘on-page’ SEO tactics you should absolutely avoid. In the interest of your time, we decided to break this into two separate posts.

Continue reading to learn the other 5 tactics you should avoid. This list will also help you check whether any of these elements are on your site, or whether any competitors are using these tactics. Being able to spot them will help you avoid a penalty or, worse, being banned from the search engines entirely.

6. Using the “Phantom Pixel”

The phantom pixel is essentially an image so small (only one pixel) that your site’s visitors can’t see it. However, these microscopic images can be used to link to other pages, or their “alt” attribute can be stuffed with the keywords you’re trying to target.

Just as they do with hidden text (…or anything viewable by the search engines but hidden from human visitors), search engines absolutely hate the phantom pixel. Having just one on any web page on your site can result in a penalty, or even a complete ban.

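To check whether your site or a competitor’s site has hidden images, press “Ctrl+A” (“Cmd+A” on a Mac), which highlights everything on the page. Tiny images and hidden text that were invisible before will often show up as highlighted specks.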
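If you’d rather check a page’s source than eyeball it, a short script can flag likely phantom pixels. The Python sketch below is our own illustration, not a tool the search engines use. It assumes the image’s size is declared in the `<img>` tag’s `width` and `height` attributes, so images shrunk with CSS will slip past it. The names `TinyImageFinder` and `find_tiny_images` are hypothetical.

```python
from html.parser import HTMLParser


class TinyImageFinder(HTMLParser):
    """Collect <img> tags whose declared width and height are all 1 pixel or less."""

    def __init__(self):
        super().__init__()
        self.suspects = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        sizes = []
        for name in ("width", "height"):
            raw = (attributes.get(name) or "").strip().lower()
            if raw.endswith("px"):
                raw = raw[:-2].strip()
            if raw.isdigit():
                sizes.append(int(raw))
        # Only sizes declared in the tag itself are checked; CSS sizing is ignored (assumption).
        if sizes and max(sizes) <= 1:
            self.suspects.append({"src": attributes.get("src"), "alt": attributes.get("alt")})


def find_tiny_images(html):
    """Return the src and alt text of every suspiciously tiny image in the HTML."""
    finder = TinyImageFinder()
    finder.feed(html)
    finder.close()
    return finder.suspects


page = (
    '<p>Welcome!</p>'
    '<a href="/deals"><img src="dot.gif" width="1" height="1" alt="cheap widgets"></a>'
    '<img src="logo.png" width="200" height="80" alt="Acme logo">'
)
print(find_tiny_images(page))  # [{'src': 'dot.gif', 'alt': 'cheap widgets'}]
```

The keyword-stuffed `alt` text comes back alongside the image, which is exactly the combination described above.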

7. Doorway pages

These are essentially low-quality pages that are automatically generated by software and are designed to rank well for as many keywords as possible. Many aggressive SEOs use these types of pages to funnel visitors into other pages designed to convert the visitor into a customer.

Creation of doorway pages often involves two specific tools. One is a software program that ‘scrapes,’ or copies, content from other web pages or RSS feeds. This content is republished on the doorway page, which then links to the main sales page being targeted. The other, known as “Markov chain content generation,” uses an algorithm that strings words together by choosing each next word based on which words followed the current one in some source text. While this copy is hard for search engines to spot, it looks absolutely terrible to an actual person.
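To see why Markov chain copy reads so badly, here is a minimal word-level sketch in Python. It is a simple first-order chain, and real content generators may work differently. The function names are our own. Every pair of neighboring words it produces appeared somewhere in the source text, but nothing ties the sentence together beyond that.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that followed it in the source text."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def generate(chain, start, length, seed=None):
    """Walk the chain: each next word is drawn only from words that followed the current one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = chain.get(output[-1])
        if not candidates:  # dead end: this word never had a successor in the source
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


source = (
    "our widgets are cheap and our widgets are strong "
    "and our prices are low and our service is fast"
)
chain = build_chain(source)
print(generate(chain, "our", 12, seed=7))
```

Because the chain only “remembers” one word back, the output drifts from phrase to phrase without meaning anything, which is why it fools a quick automated check more easily than a human reader.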

Therefore, do not use software to generate your site’s content. While it’s okay to use a system to “manage” your site’s content, it should be written by an actual person.

8. Meta & JavaScript Redirects

Redirects are commonly used to steer site visitors away from obsolete pages or dead links. When used in this context, they’re okay.

However, spammers often use redirects in ways search engines hate. The typical strategy is to build a page stuffed with keywords and then redirect visitors to a sales page that would not rank well otherwise.

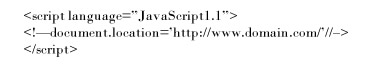

One way this is often done is to add a “meta refresh” tag to the `<head>` section of the HTML code, such as `<meta http-equiv="refresh" content="1;url=https://www.example.com/">`, where example.com stands in for the destination page.

This tag redirects, or refreshes, the page by sending the visitor to a different URL. The number “1” in the `content` attribute is the number of seconds to display the old page before redirecting.
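To check a page for this kind of redirect, you can parse its `<meta>` tags and read the delay and destination out of the `content` attribute. This Python sketch assumes the common `seconds;url=...` form of that value. `MetaRefreshFinder` and `parse_refresh` are hypothetical helper names, and the example URL is a placeholder.

```python
import re
from html.parser import HTMLParser


def parse_refresh(content):
    """Split a value like '1;url=https://www.example.com/' into (seconds, url)."""
    match = re.match(r"\s*(\d+)\s*(?:[;,]\s*(?:url\s*=\s*)?(.*))?$", content, re.IGNORECASE)
    if not match:
        return None
    # Drop surrounding whitespace and quotes; a bare delay means "reload this same page".
    url = (match.group(2) or "").strip().strip("'\"") or None
    return int(match.group(1)), url


class MetaRefreshFinder(HTMLParser):
    """Record (seconds, url) for every <meta http-equiv="refresh"> tag."""

    def __init__(self):
        super().__init__()
        self.refreshes = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("http-equiv") or "").lower() == "refresh":
            self.refreshes.append(parse_refresh(attributes.get("content") or ""))


page = '<html><head><meta http-equiv="refresh" content="1;url=https://www.example.com/sales-page"></head></html>'
finder = MetaRefreshFinder()
finder.feed(page)
print(finder.refreshes)  # [(1, 'https://www.example.com/sales-page')]
```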

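JavaScript redirects are another way to accomplish the same thing. While search engines may scan JavaScript for URLs to index, they generally do not run the code. The script sends human visitors to a different page, but the search engines ignore that redirect and index the content of the initial, keyword-stuffed page.

A JavaScript redirect can be as short as a single line that assigns the destination URL to `window.location`, for example `window.location = "https://www.example.com/";`, with example.com standing in for the destination page.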
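Spotting JavaScript redirects is harder, since there are many ways to write them. As a rough, hypothetical heuristic, the Python sketch below looks for the most common patterns: a string literal assigned to `location` or `location.href`, or passed to `location.replace()` or `location.assign()`. Obfuscated or dynamically built redirects will get past it.

```python
import re

# Heuristic patterns (assumption): a literal URL assigned to location / location.href,
# or passed to location.replace() / location.assign().
JS_REDIRECT_PATTERN = re.compile(
    r"""location(?:\.href)?\s*=\s*['"]([^'"]+)['"]"""
    r"""|location\.(?:replace|assign)\(\s*['"]([^'"]+)['"]\s*\)"""
)


def find_js_redirects(script_text):
    """Return the literal destination URLs the script sends the browser to."""
    # findall yields one (group1, group2) tuple per match; only one group is non-empty.
    return [first or second for first, second in JS_REDIRECT_PATTERN.findall(script_text)]


print(find_js_redirects('<script>window.location = "https://www.example.com/";</script>'))
# ['https://www.example.com/']
```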

Redirects have many legitimate uses, from moving pages and rewriting URLs to changing domains. But since JavaScript and meta redirects have been so heavily abused by spammers, we strongly recommend using a 301 (“Moved Permanently”) redirect, which your web server sends as an HTTP status code, whenever you need to send visitors to a new page.
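Here is what a 301 looks like on the server side, sketched as a minimal Python WSGI app that uses only the standard library. The `PERMANENT_MOVES` mapping and its paths are hypothetical. In practice you’d usually set this up in your web server’s configuration rather than in application code.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical map of retired paths to their replacements.
PERMANENT_MOVES = {
    "/old-widgets.html": "/widgets/",
    "/specials.php": "/deals/",
}


def app(environ, start_response):
    """Answer retired paths with '301 Moved Permanently' plus a Location header."""
    path = environ.get("PATH_INFO", "/")
    if path in PERMANENT_MOVES:
        start_response("301 Moved Permanently", [("Location", PERMANENT_MOVES[path])])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Current page\n"]


if __name__ == "__main__":
    # Simulate one request without starting a server.
    environ = {"PATH_INFO": "/old-widgets.html"}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers):
        captured["status"], captured["headers"] = status, headers

    app(environ, start_response)
    print(captured["status"], dict(captured["headers"])["Location"])
    # 301 Moved Permanently /widgets/
```

To try it in a browser, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` will serve the app locally. Unlike a meta or JavaScript redirect, the visitor never sees the old page at all. The server answers with the new location before any content is sent.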

9. Little to no unique content

We can’t stress the importance of unique content enough. The last two big algorithm updates from Google, which affected thousands of websites, were largely driven by this issue. The problem often affects e-commerce sites that simply reuse the product descriptions provided by the manufacturer. While this isn’t considered spam, the search engines will remove these pages from their results, since they do not want to display hundreds, or even thousands, of nearly identical pages.

The same holds for both organic and PPC pages. If your landing page promotes an affiliate offer using duplicated content, its Quality Score can drop so low that you will have to pay extremely high costs to keep your ads running.

If your site is in an affiliate program or resells products, write product descriptions that differ from what the manufacturer provides. In all likelihood, hundreds of other affiliate pages are using the same content. Separate yourself from the pack and reap the benefits over your competitors.
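One rough way to check how close your copy is to the manufacturer’s is to break each text into overlapping three-word sequences (“shingles”) and compute the Jaccard similarity of the two sets. Search engines don’t publish how they measure duplication, so treat this Python sketch as an approximation. Any cut-off you pick for “too similar” is your own assumption.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word sequences ("shingles") of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a, b, size=3):
    """Shared shingles divided by all distinct shingles (0.0 = nothing shared, 1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two texts too short to shingle are treated as identical
    return len(sa & sb) / len(sa | sb)


manufacturer = "The Acme 3000 blender has a powerful motor and a large glass jar."
affiliate = "The Acme 3000 blender has a powerful motor and a large glass jar."
rewritten = "We crushed a tray of ice in ten seconds flat with the Acme 3000."
print(jaccard_similarity(manufacturer, affiliate))  # 1.0
print(jaccard_similarity(manufacturer, rewritten))
```

A copied description scores 1.0, while a genuinely rewritten one shares only a stray phrase or two with the original.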

10. IP delivery (…or cloaking)

Considered one of the most complex and controversial of SEO strategies, IP delivery or cloaking involves serving one page to your human visitors while serving a different one to the search engines. Doing so essentially hides the real page, which is the one visitors will see, from the search engines.

However, cloaking also has some legitimate uses. Since search engines cannot index Flash content (…which human visitors love), webmasters may serve different content to the search engines so the page can be indexed. It’s easy to argue that providing content the search engines can index benefits users. By the same token, though, it’s easy to argue that a setup like this is a loophole ripe for exploitation.

Briefly, when a visitor comes to your site, they’re identified by an Internet Protocol (IP) address. If you get online through cable Internet or DSL, your IP address likely stays the same for long stretches of time. Search engine spiders have their own IP addresses as well. Some SEOs go to great lengths to compile the IP addresses used by search engine spiders, which lets them tell whether a visitor is a search engine spider or an actual human.

If the IP address doesn’t match their list of search engine IP addresses, the SEO/webmaster assumes the visitor is an actual human and serves up the page designed for human eyes, which often includes graphics, JavaScript, Flash, etc. Conversely, if the IP address matches one they’ve identified as a search engine spider, a text-only, keyword-rich page is served up instead.
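The IP-delivery decision described above boils down to a lookup like the one in this Python sketch, shown only to make the mechanism concrete. The network ranges are placeholders from the reserved documentation blocks, not real search engine addresses, and the page descriptions are hypothetical.

```python
import ipaddress

# Placeholder "spider" networks taken from the reserved documentation ranges
# (RFC 5737). They are NOT real search engine addresses.
SPIDER_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_listed_spider(visitor_ip):
    """True if the visitor's IP falls inside one of the listed networks."""
    address = ipaddress.ip_address(visitor_ip)
    # An IPv6 address is never "in" an IPv4 network; the check simply returns False.
    return any(address in network for network in SPIDER_NETWORKS)


def choose_page(visitor_ip):
    """The cloaking decision: listed IPs get one page, everyone else another."""
    if is_listed_spider(visitor_ip):
        return "text-only, keyword-rich page"
    return "page with graphics, JavaScript and Flash"


print(choose_page("192.0.2.15"))   # text-only, keyword-rich page
print(choose_page("203.0.113.7"))  # page with graphics, JavaScript and Flash
```

The human visitor never sees the page the search engine indexed, which is exactly why this setup is treated as deception.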

As with hidden text and images, any time you serve human visitors different content than you serve the search engines, you’re asking for trouble. While search engines do make a few exceptions for cloaking, it’s best to play it safe.

When discussing acceptable and unacceptable SEO practices, the debate often devolves into white hat vs. black hat camps. In reality, there are a lot of grey areas.

The big question you need to ask is whether your website provides value to visitors. Remember, search engines are in the business of delivering the most valuable, relevant pages to their users. If they’re constantly delivering junk, people will quit using them. Therefore, if someone uses tactics that attempt to rank low-quality pages high in the search results, they shouldn’t be surprised when the search engine takes action to remove the offending site.

Remember, any strategy that sacrifices long-term, sustainable rankings for short-term gain is asking for trouble. Make sure your web pages offer value to your visitors while, at the same time, letting the search engines know what your pages are about.

Have you used any of these SEO strategies, whether intentionally or not, in the past?

If so, were you penalized? If you were, how long did it take you to recover?

Let us know in the comments section below or drop us a line on Facebook today!

Related Posts

10 ‘On Page’ SEO Tactics You Should Avoid At All Costs – Part I

Answer These 23 Questions to Understand What Google Looks For

Penguin Update Targets Link Schemes and Low-Quality Content

3 Steps You Should Take Before Linking to Another Site

Google Panda Update Causes Some Sites to Lose Traffic, Revenues